Dynamics365 and the Dataverse

Make an environment in Power Platform

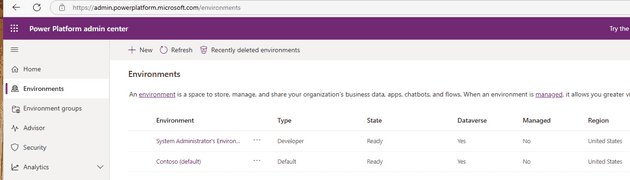

If you are attempting this without any created before hand then go to https://admin.powerplatform.microsoft.com/environments and make a new environment.

I made a “Developer” type environment and I ticked on the option to have a Dataverse with sample data.

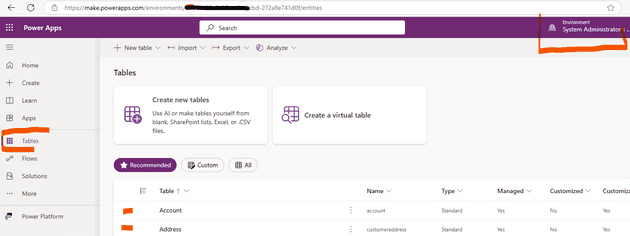

Once you have it set up, it will usually take about 15 minutes to spin up and create the sample data. You can then go to make.powerapps.com. In there you will see a tab on the left called “Tables”. These are your tables for the Dataverse. There is an environment switcher at the top right of the page.

You will need to see the tables in this view above so that you can see the changes you will be making when calling the API endpoints.

Service Principal set up for the Dataverse

In order to use the API you need a service principal. Go ahead and create an Application Registration in Entra ID. Create a client secret and keep it safe for now, you will put this in a Key Vault soon.

Add this new principal to your Power Platform environment, but clicking on the elipses, then going to Settings/User + permissions/Application Users.

When you are on this page, you will see a + New app user button near the top left. I had some issues adding mine as a new app user as the Business Unit didn’t have a drop down.

I copied the business unit name from another user in my list and copied it into the pop up box that comes up when adding a new user. I added all security roles to the service principal.

Link a Keyvault to your Databricks

Go to https://adb-[uniquenumberhere].azuredatabricks.net/#secrets/createScope

The page that loads will ask for your Key Vault details, go ahead and add your key vault deatils.

In the Azure Portal on your Key Vault, you will have to add the AzureDatabricks enterprise application (which exists in the Entra ID) as a Key Vault Secrets User in the IAM (RBAC blade).

You can see some details about your “Scope” as they call it, this is the name you would have given when setting up the Key Vault. Try this line of code in Databricks dbutils.secrets.list("kvgeneral56")

Your Scope name will be different to mine “kvgeneral56”. You can also see a list of scopes by dbutils.secrets.listScopes()

Coding in Databricks

Doing a GET request to the Dataverse

To keep it simple I just tested this idea by doing a select with a filter on a specific account name which I know exists. You will see this in the code below.

import requests

import json

# Azure AD authentication

tenant_id = '316xxxxx-c4fa-41da-96d8-33d84a140dd1'

client_id = '351xxxxx-bade-49d7-a066-83c6ada4afad'

client_secret = dbutils.secrets.get(scope="kvgeneral56", key="spn-fbrc-us1-clientsecret")

resource = 'https://org6xxxx548.crm.dynamics.com/'

auth_url = f'https://login.microsoftonline.com/{tenant_id}/oauth2/token'

auth_data = {

'grant_type': 'client_credentials',

'client_id': client_id,

'client_secret': client_secret,

'resource': resource

}

response = requests.post(auth_url, data=auth_data)

access_token = response.json().get('access_token')

api_url = f'{resource}/api/data/v9.2/accounts?$select=name,address1_composite&$filter=name eq \'Yo Moshi\''

headers = {

'Authorization': f'Bearer {access_token}',

'Content-Type': 'application/json'

}

response = requests.get(api_url, headers=headers)

# Convert the response to JSON and extract account ID

data = response.json() # Parses the response content as JSON

account_id = data['value'][0]['accountid'] # Extract the account IDDoing a PATCH request to the Dataverse

A Patch request is an update action.

- I get the accountid of the record from Dataverse (In this case I am looking for Yo Moshi)

- I get a name from the “nameo” column from my Delta Lake tables called

db1.peeps - Extract the name value as a Python string

- I then call the API PATCH and do an update to the account record with that accountid in the Dataverse

# Use the account ID from the response in the previous command window

print(f'Account ID: {account_id}')

# Step 1: Query the Delta table

delta_query = "SELECT nameo FROM `azdbrclint1`.`db1`.`peeps`"

delta_df = spark.sql(delta_query)

# Extract the name value as a Python string

name_from_table = delta_df.collect()[0]['nameo'] # Assumes at least one record exists

print(f"Name from Delta Table: {name_from_table}")

# Step 3: Update the account record (PATCH)

update_url = f'{resource}/api/data/v9.2/accounts({account_id})'

patch_headers = {

'Authorization': f'Bearer {access_token}',

'Content-Type': 'application/json',

'If-Match': '*' # Allows update regardless of concurrency

}

# Data to update

patch_data = {

"name": name_from_table # Name fetched from Delta table

}

patch_response = requests.patch(update_url, headers=patch_headers, data=json.dumps(patch_data))

# Check the response

if patch_response.status_code == 204:

print("Update successful!")

else:

print(f"Update failed: {patch_response.status_code}, {patch_response.text}")